OpenAI’s Prompt Design

This article was published more than a year ago. Please note that the information may be out of date.

Introduction

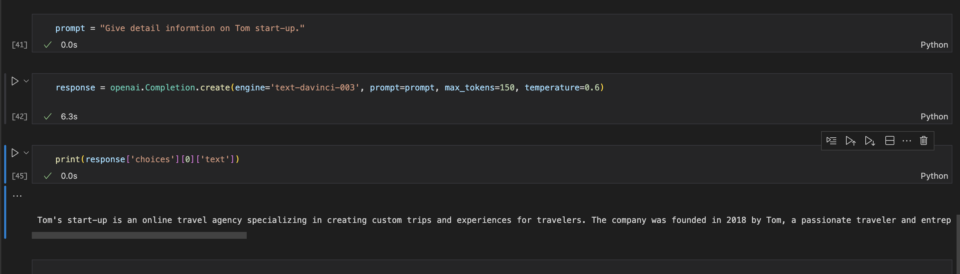

Issue: "Large Language Models (LLMs) can HALLUCINATE!" In our case the LLM is GPT, which, when given an arbitrary prompt, can assume or hallucinate a situation that sounds real but is actually false or made up.

Example:

The response generated here is not real. This situation can be handled by a better prompt.

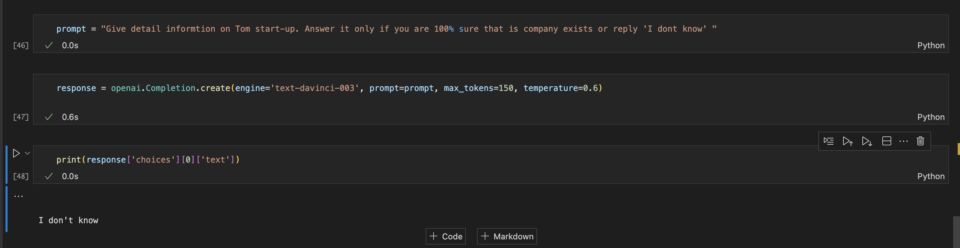

Example: add a constraint to the prompt so that the model answers only if it is 100% sure of the result. My new prompt now has some extra content: "Answer it only if you are 100% sure that this company exists, or reply 'I don't know'".

Now let's see how the API responds.

As you can see, it just said: "I don't know".
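The constraint above can be sketched as a small helper that appends the instruction to any question. This is only an illustration: the function name `with_uncertainty_guard` and the example company are hypothetical, and the resulting string would still have to be sent to the model through whatever API client you use.

```python
# Hypothetical helper: appends the "answer only if sure" constraint to a question.
GUARD = (
    "Answer it only if you are 100% sure that this company exists, "
    "or reply 'I don't know'."
)


def with_uncertainty_guard(question: str, guard: str = GUARD) -> str:
    """Return the question followed by the constraint sentence."""
    return f"{question.strip()} {guard}"


if __name__ == "__main__":
    # The company name below is made up for illustration.
    print(with_uncertainty_guard("Tell me about the company Zyxqo Labs."))
```

Keeping the constraint in one constant makes it easy to reuse the same wording across prompts and to adjust it in a single place.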

This is where we see the importance of giving the right prompt to the API. Language models are capable of creating novel stories as well as doing intricate text analyses. Because they can accomplish so many different things, we must be specific when stating what we want. An excellent prompt involves showing rather than just telling. There are no hard and fast rules for writing a prompt, and that's what makes it challenging.

What's Needed in a Prompt

Let's discuss a few aspects to be included in a Prompt.

- Instructions: Explain the aim of the prompt clearly, and use ### or """ to separate the instructions from the text they apply to. Be careful not to include spelling errors or unnecessary information. While the model can usually detect simple spelling errors and still respond, it might also assume that they are deliberate, which could affect the response.
- Details: Give enough detail about the expected response, including any specifications. For instance, rather than just saying "Tell a poem on Moon", say "Tell a haiku style three-line poem on Moon".
- Example: You can also add an example depicting your expected output. Let's say you need names in last name, first name format. You can add an example like "name -> last name, first name: Taro Suzuki -> Suzuki, Taro".
- Initiate a response: This is really useful for code completion scenarios. Just start off the expected response yourself. For example, for a Python function that finds the sum of numbers, end the prompt with the start of the expected response: "def sum(..."
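The aspects above can be combined in a small prompt builder. This is a minimal sketch, not an official recipe: `build_prompt` and its parameter names are hypothetical, the layout (instruction, details, example, then the input text fenced with ###) is just one reasonable arrangement, and "Hanako Yamada" is a made-up input.

```python
def build_prompt(instruction, text, details="", example="", response_start=""):
    """Assemble a prompt from the parts listed above (all names are illustrative)."""
    parts = [instruction.strip()]
    if details:
        parts.append(details.strip())
    if example:
        parts.append(f"Example: {example.strip()}")
    # The ### lines mark where the input text begins and ends.
    parts.append(f"###\n{text.strip()}\n###")
    prompt = "\n".join(parts)
    if response_start:
        # Ending the prompt with the start of the answer nudges the model to continue it.
        prompt += "\n" + response_start
    return prompt


if __name__ == "__main__":
    print(build_prompt(
        instruction="Rewrite each name below.",
        details="Use the format: last name, first name.",
        example="Taro Suzuki -> Suzuki, Taro",
        text="Hanako Yamada",
    ))
```

For a code completion scenario, passing `response_start="def sum("` makes the prompt end with the opening of the function so that the model only has to continue it.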

Think of a prompt as an explanation you would give to a colleague. But remember that more detail is not always better: keep it simple and direct, because unnecessary information can be misleading. Also, everything in the prompt counts toward your tokens, so use your words wisely. Please refer to the original documentation for more details.

Others

The other parameters are our controls for achieving the expected results.

- When choosing the model/engine, always use the latest models. Understand the significance of each model along with its cost.
- Also use the `temperature` and `top_p` variables wisely to control the scope of the response. Set them lower if you're asking a question for which there is only one correct response. You may want to set them higher if you want more varied responses.
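As a rough sketch of the advice above, the snippet below picks lower or higher sampling values depending on the kind of question and collects them in a plain dictionary of request parameters. The specific numbers, the function names, and the "MODEL_NAME" placeholder are assumptions for illustration only; how the parameters are actually passed depends on the client library and API version you use.

```python
def sampling_settings(single_correct_answer: bool) -> dict:
    """Pick illustrative temperature/top_p values; the numbers are assumptions."""
    if single_correct_answer:
        # Low values keep the output focused and repeatable.
        return {"temperature": 0.0, "top_p": 0.1}
    # Higher values allow more varied output.
    return {"temperature": 0.9, "top_p": 1.0}


def build_request(prompt: str, single_correct_answer: bool, model: str = "MODEL_NAME") -> dict:
    """Build request parameters as a plain dict; "MODEL_NAME" is a placeholder."""
    return {"model": model, "prompt": prompt, **sampling_settings(single_correct_answer)}


if __name__ == "__main__":
    print(build_request("What is the capital of Japan?", single_correct_answer=True))
    print(build_request("Tell a haiku style three-line poem on Moon", single_correct_answer=False))
```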

Thank you, Happy learning.